There’s no denying defensive metrics are controversial. Whether they clash with what you’ve seen with your own eyes, or you just don’t believe them, it seems like everyone has some sort of opinion to offer on their validity.

On FanGraphs, we carry no fewer than four different defensive metrics:

UZR – Mitchel Lichtman’s Ultimate Zone Rating

DRS – John Dewan’s Defensive Runs Saved

TZL – Rally’s Total Zone (location based version)

TZ – Rally’s Total Zone (standard version)

There’s no denying that we use some more frequently than others (cough, UZR), but we carry all four because it’s great to see what different data sets and different models spit out. In addition to the four, there’s also a fifth, completely unrelated metric: the Fans Scouting Report, which Tangotiger runs each and every year on insidethebook.com.

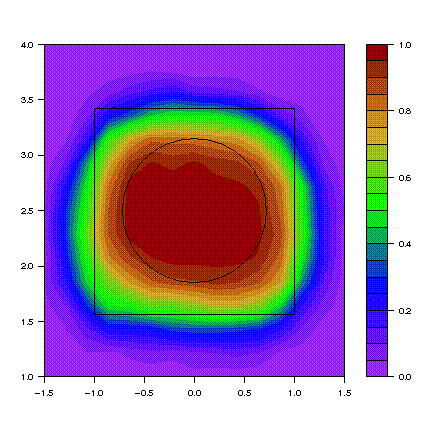

It’s important to note that these defensive metrics are not all on the same scale, so it’s difficult to glance at all four (five if you use the Fans Scouting Report) and get a good sense of whether they’re in agreement. Which brings me to a preliminary look at the Aggregate Defensive Evaluations, where each metric is put on the same scale for each position and averaged, and then a standard deviation across the metrics is computed for each player. Here are the 2009 Shortstops (min 82 games played):

As you can see, Paul Janish and Brendan Ryan are the clear leaders atop the list, and the metrics are for the most part in agreement about them. Even a swing of 5 runs in either direction would still leave them elite defenders.

And then there are players like Yunel Escobar, who is considered very good by Total Zone and DRS but more or less average by UZR and the Fans. On an aggregate level he still ends up as very good, though there is a fair amount of disagreement as to just how good he is, even if no system thinks he’s below average.

All in all, it should be easy to go up and down the list and see which players the metrics agree on with a high level of confidence, and which they do not.

From a purely computational standpoint, is this the best way to go about combining defensive metrics? I’m really not sure, and it’s certainly worth looking into further. There are a lot of options for how to weight the metrics and how to scale them, but overall I feel this is at least a decent start and something I hope to delve into a bit more.
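For anyone who wants to play with the idea, here is a rough Python sketch of the scale-average-deviate approach described above. It is not the exact calculation behind the table: the post doesn’t spell out the scaling method, so this assumes a plain z-score of each metric across the players at one position, with equal weights. The function name `aggregate_defense`, the `target_sd` parameter, the `FSR` label for the Fans Scouting Report, and the sample players and numbers are all made up for illustration.

```python
from statistics import mean, pstdev, stdev


def aggregate_defense(ratings, target_sd=1.0):
    """Combine several defensive metrics for players at ONE position.

    ratings: {player: {metric_name: value}} (hypothetical input shape).
    Assumption: "same scale" means a z-score per metric across players,
    optionally stretched by target_sd. Metrics are weighted equally.
    Returns {player: (mean_of_scaled_metrics, sd_across_metrics)}.
    """
    metrics = sorted({m for vals in ratings.values() for m in vals})
    scaled = {player: [] for player in ratings}

    for metric in metrics:
        values = [vals[metric] for vals in ratings.values() if metric in vals]
        center = mean(values)
        spread = pstdev(values)  # spread of this metric across players
        for player, vals in ratings.items():
            if metric not in vals:
                continue  # player has no rating in this metric
            z = 0.0 if spread == 0 else (vals[metric] - center) / spread
            scaled[player].append(z * target_sd)

    return {
        player: (mean(zs), stdev(zs) if len(zs) > 1 else 0.0)
        for player, zs in scaled.items()
        if zs
    }


# Invented numbers, NOT real player data.
sample = {
    "Player A": {"UZR": 10.0, "DRS": 12.0, "TZL": 8.0, "TZ": 9.0, "FSR": 80.0},
    "Player B": {"UZR": 0.0, "DRS": 8.0, "TZL": 9.0, "TZ": 0.5, "FSR": 55.0},
    "Player C": {"UZR": -8.0, "DRS": -10.0, "TZL": -9.0, "TZ": -7.0, "FSR": 35.0},
}

result = aggregate_defense(sample)
for player, (avg, sd) in sorted(result.items(), key=lambda kv: -kv[1][0]):
    print(f"{player}: mean={avg:+.2f} sd={sd:.2f}")
```

A low standard deviation means the systems broadly agree on a player; a high one flags a player, like the invented Player B here, whom the systems see differently. Swapping in unequal weights or a different scaling would only change the body of the inner loop.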

The point here is that there’s a lot of information in these metrics. With so many models out there, it’s becoming increasingly important to try to identify what we’re fairly confident about and what we’re not so confident about, instead of making the mistake of throwing them all away.